Workflow with TDD and AI Agents

Don’t Let AI Fool You

I have to admit, I was pretty blown away the first time I saw GitHub Copilot coding a full Kafka Streams topology in Java… just from the comments I had left in a Java class.

I was impressed. I thought to myself:

Whoa… this thingy is going to save me so much time coding for Kafka!

But, as with everything in life, you learn. And at some point, I caught myself thinking:

But, hang on, coding for Kafka is something I actually enjoy… why am I letting this agent do the part I love?

That’s just me, though. What about you?

Here’s the takeaway I’m bringing today for our ongoing journey into becoming AI Native:

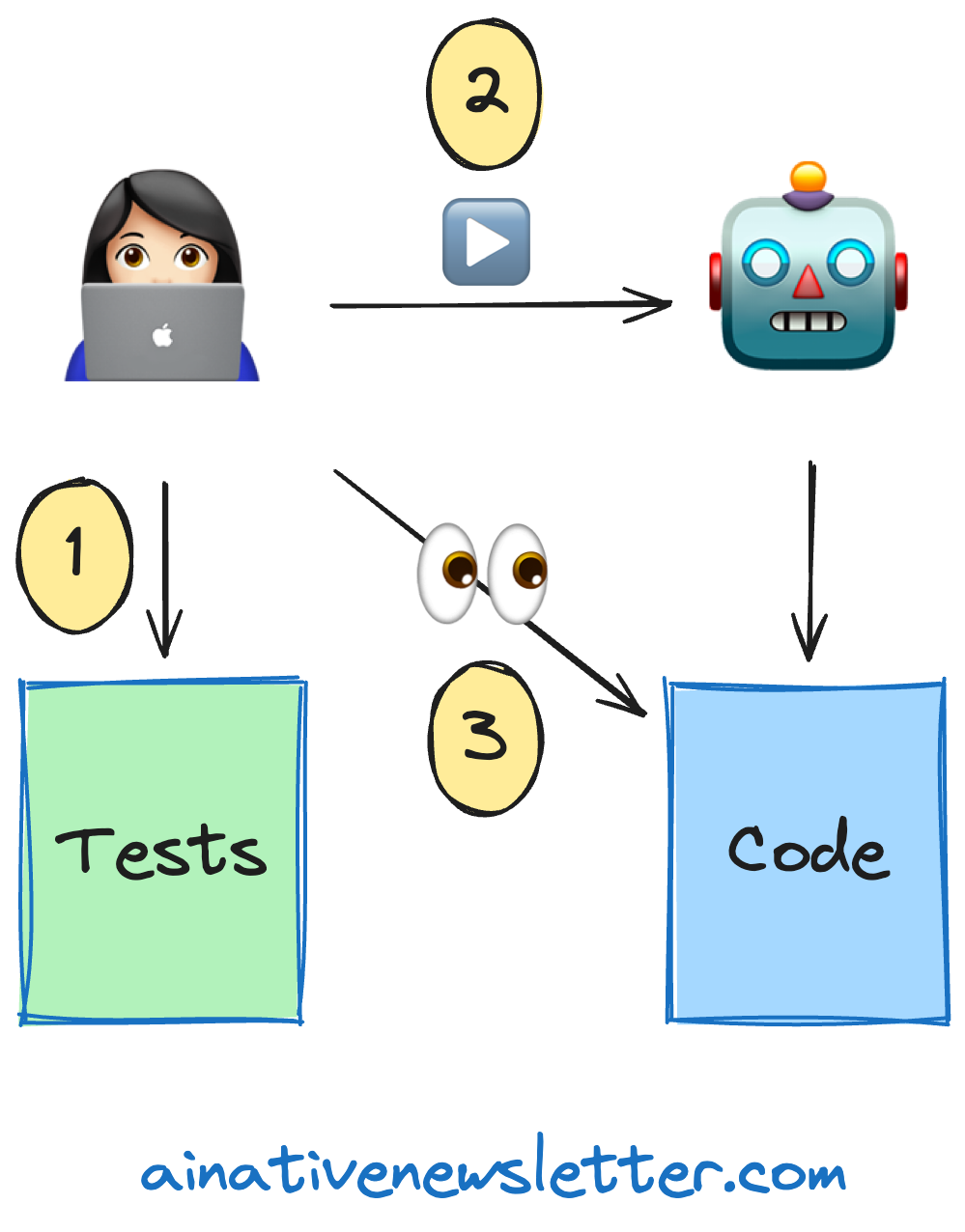

You write the acceptance tests…

Ask the AI agent to implement the code that makes those tests pass…

Review the generated code, then iterate.


Yes, I know. A lot of people love coding but dislike writing tests. I’m not sure I can convince those folks… but I can tell you from my experience: starting with tests improves your software quality.

Starting from the tests is called Test-Driven Development (TDD from now on). I’ll share some of its benefits with you in a moment.

From my experience, agents like Claude Sonnet 4 can get you about 75% of the way to code that’s ready to merge, which is pretty good. But that remaining 25%? That’s where the real difference is made.

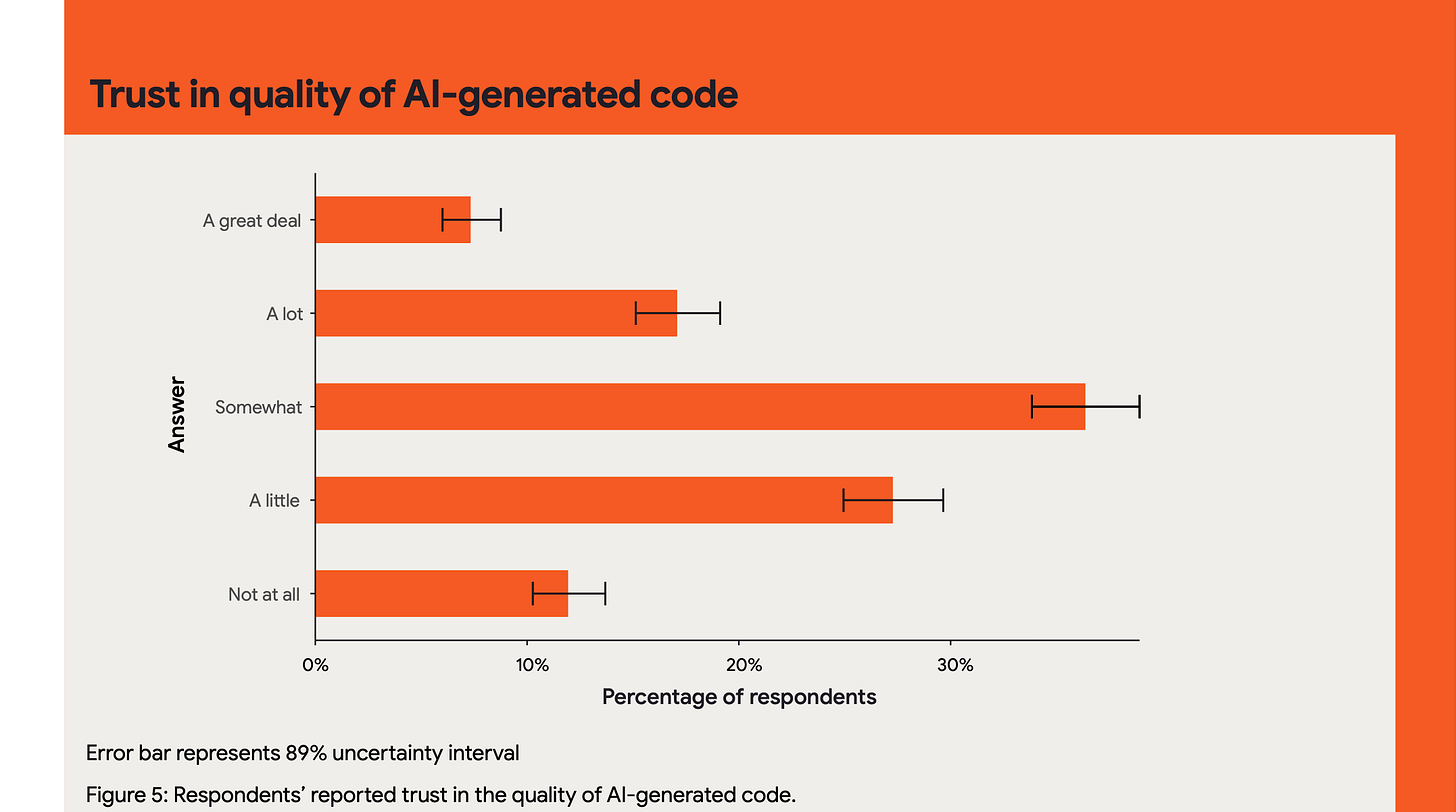

Don’t trust AI blindly. It will write code “as best it can” based on the instructions you feed it. Always review the AI’s code and tweak it until it fits your actual needs and your quality bar. I’m not saying this only from my own experience. Others report the same, as you can see in this graph taken from the State of DevOps 2024 report. Quoting:

Participants’ perceptions of the trustworthiness of AI-generated code used in development work were complex. While the vast majority of respondents (87.9%) reported some level of trust in the quality of AI-generated code, the degree to which respondents reported trusting the quality of AI-generated code was generally low, with 39.2% reporting little (27.3%) or no trust (11.9%) at all.

But some of you might be wondering:

Marcos, why start with the tests when I could just work with the AI until it gives me both the code and the tests?

Two reasons:

1. We, as software developers, own the “what it should do.” We’ve decoded the product specs from the Product team and we know exactly what our application must do.

The way to implement a solution with code might differ—each of us interprets specs in our own way, and AI is no exception.

You are responsible for how the app or feature should behave, and you can’t simply trust the AI to get that right. That’s why you need to write the acceptance tests yourself. By doing that, you’re laying down the rails for the AI to implement the right code when you ask it to.

2. TDD has many benefits for our software, whether we like it or not. I’m not here to list every single benefit of TDD, but here are a few taken from various Dave Farley posts:

Fewer bugs.

More flexible code.

Better design.

Remember the workflow:

You write the acceptance tests…

Ask the AI agent to implement the code that makes those tests pass…

Review the generated code, then iterate.
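To make the workflow a bit more concrete, here is a minimal sketch in plain Java. Everything in it is an assumption for illustration: the word-count feature, the `WordCounter` class, and its `count` method are hypothetical names, and I use a tiny hand-rolled `check` helper instead of a real test framework so the example stays self-contained. The acceptance test is the part you write first. The `WordCounter` body is the kind of code you might get back from an agent and then review.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

// Step 1 (you): the acceptance test states WHAT the feature must do.
class WordCountAcceptanceTest {

    static void countsWordsIgnoringCaseAndBlankLines() {
        List<String> lines = List.of("Kafka streams", "", "kafka  Topology");

        Map<String, Long> counts = WordCounter.count(lines);

        check(counts.size() == 3, "expected exactly three distinct words");
        check(counts.getOrDefault("kafka", 0L) == 2L, "kafka should be counted twice");
        check(counts.getOrDefault("streams", 0L) == 1L, "streams should be counted once");
        check(counts.getOrDefault("topology", 0L) == 1L, "topology should be counted once");
    }

    // Hand-rolled assertion helper so the sketch needs no test library.
    static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }

    public static void main(String[] args) {
        countsWordsIgnoringCaseAndBlankLines();
        System.out.println("acceptance test passed");
    }
}

// Step 2 (the agent): one possible implementation that makes the test pass.
// Step 3 (you again): review it, question it, and iterate.
class WordCounter {

    static Map<String, Long> count(List<String> lines) {
        Map<String, Long> counts = new LinkedHashMap<>();
        for (String line : lines) {
            // Lowercase so "Kafka" and "kafka" are the same word,
            // then split on any run of whitespace.
            for (String word : line.trim().toLowerCase(Locale.ROOT).split("\\s+")) {
                if (!word.isEmpty()) { // a blank line yields one empty token
                    counts.merge(word, 1L, Long::sum);
                }
            }
        }
        return counts;
    }
}
```

In a real project you would probably write the test with whatever framework your team already uses, and the feature would be an actual product requirement rather than a toy. The point of the sketch is the order: the test exists before the implementation, so the agent has a fixed target and your review has something objective to lean on.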

What do you think of this workflow? Want to tell me how it went for you? If so, send me an email at hello@ainativenewsletter.com.